In the ever-evolving landscape of artificial intelligence, Google's research teams have pioneered breakthroughs that redefine our interactions with technology. Among their contributions, two Natural Language Processing (NLP) models stand out: PEGASUS, a model built for abstractive text summarization, and PaLM (Pathways Language Model), a large general-purpose language model. Each brings a distinct set of capabilities to the table. In this blog post, we take a journey through both: from the abstractive summarization prowess of PEGASUS to the broad language understanding and generation capabilities of PaLM, we will see how these models are advancing natural language understanding and generation. Get ready to delve into the future of communication, where language is not just processed but understood, synthesized, and put to use in ways that were once the realm of science fiction.

What is the Pegasus Model?

PEGASUS is a natural language processing (NLP) model developed by Google Research. It is specifically designed for abstractive text summarization, where the model generates a concise and coherent summary that captures the essential information from a given document.

Here are some key features and aspects of the PEGASUS model:

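- Pre-training: PEGASUS is pre-trained on a large corpus of web and news text (the C4 and HugeNews datasets) with a self-supervised objective called gap-sentence generation: whole sentences are removed from a document, and the model learns to generate them from the remaining text. Because this task closely resembles summarization, what the model learns about the structure and meaning of text transfers well to generating high-quality abstractive summaries later on.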

- Abstractive Summarization: Unlike extractive summarization, where the model selects and outputs existing sentences from the document, PEGASUS performs abstractive summarization. This means it can generate new sentences that capture the main ideas of the input text.

- Fine-tuning for Summarization Tasks: PEGASUS can be fine-tuned on specific summarization tasks, adapting the model to generate summaries that align with the requirements of a particular domain or dataset.

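- Importance-Based Sentence Selection: During pre-training, the sentences to be masked are not chosen at random. Each sentence is scored by its ROUGE-1 overlap with the rest of the document, and the highest-scoring (most important) sentences are masked. This teaches PEGASUS to identify and reproduce key information, which carries over to the summaries it generates.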

- Wide Applicability: PEGASUS has demonstrated effectiveness in various summarization tasks, including news articles, scientific papers, and other types of documents. Its versatility makes it a valuable tool for condensing information across different domains.
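During PEGASUS pre-training, important sentences are selected by scoring each one with ROUGE-1 against the rest of the document; the top-scoring ones are replaced by a mask token in the input and become the generation target. Below is a minimal sketch of that selection step, assuming a simplified ROUGE-1 (lowercase word overlap, no stemming), a naive regex sentence splitter, and a 30% gap ratio chosen for illustration. The function names are hypothetical, and this is not the official PEGASUS preprocessing code.

```python
import re
from collections import Counter
from typing import List, Tuple

MASK_TOKEN = "[MASK1]"  # sentence-mask token name used in the PEGASUS paper


def split_sentences(text: str) -> List[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def rouge1_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1 F1: overlap of lowercase word unigrams, no stemming."""
    cand = Counter(re.findall(r"\w+", candidate.lower()))
    ref = Counter(re.findall(r"\w+", reference.lower()))
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def make_gap_sentence_example(document: str, ratio: float = 0.3) -> Tuple[str, str]:
    """Return (masked_input, target) for one gap-sentence generation example."""
    sentences = split_sentences(document)
    n_gaps = max(1, round(len(sentences) * ratio))
    # Score each sentence independently against the rest of the document.
    scored = []
    for i, sentence in enumerate(sentences):
        rest = " ".join(s for j, s in enumerate(sentences) if j != i)
        scored.append((rouge1_f1(sentence, rest), i))
    # Keep the top-scoring sentences, restored to document order.
    gaps = sorted(i for _, i in sorted(scored, reverse=True)[:n_gaps])
    masked_input = " ".join(MASK_TOKEN if i in gaps else s for i, s in enumerate(sentences))
    target = " ".join(sentences[i] for i in gaps)
    return masked_input, target
```

On a four-sentence document, a 30% ratio masks one sentence: the one sharing the most words with the rest of the text, which is the "central" sentence a summary would most likely need.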

Amazing facts about the Pegasus model

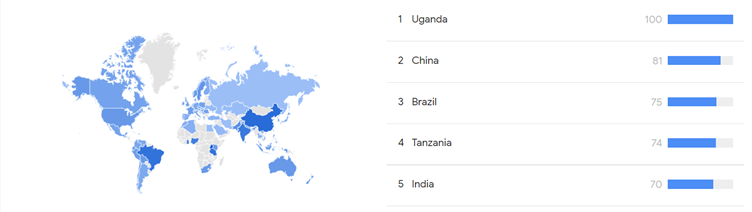

Over the last year, the Pegasus model has been searched about 80 times per month on average.

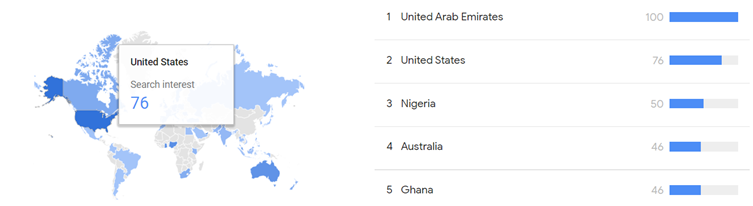

Google Trends also shows Uganda at the top of the by-country list for this search term.

PaLM Models

Pathways Language Model (PaLM) is a large language model (LLM) developed by Google AI and announced in 2022. PaLM is a decoder-only transformer-based language model, and its largest version has 540 billion parameters. Transformers are a type of neural network that is particularly well-suited for natural language processing tasks. They use a mechanism called self-attention to learn the relationships between words and phrases in a sentence.

PaLM is trained on a massive dataset of text and code, including web pages, books, Wikipedia, news articles, social media conversations, and source code from GitHub. By learning the relationships between words and phrases across this data, PaLM can perform a wide range of tasks, such as translation, summarization, question answering, code generation, and creative writing.

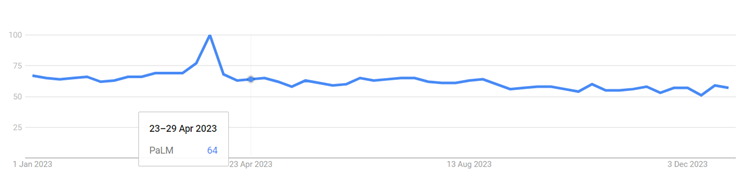

Google Trends facts:

Over the last year, topics related to PaLM have been searched worldwide about 64 times per month on average.

The UAE leads the by-country list for this topic.

Some key concepts behind NLP models

Natural Language Processing (NLP) models play a crucial role in understanding and generating human language. Here are some important things to know about NLP models:

1. Types of NLP Models:

- Rule-Based Systems: Traditional systems relying on handcrafted linguistic rules.

- Statistical Models: Utilize statistical methods to analyze language patterns.

- Machine Learning Models: Trainable models that learn patterns from data.

- Deep Learning Models: Neural network-based models, such as transformers, recurrent neural networks (RNNs), and long short-term memory networks (LSTMs).

2. Pre-trained Models:

- Many recent advancements involve pre-training models on large datasets before fine-tuning for specific tasks.

- Examples include BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer).

3. Transfer Learning:

- Transfer learning allows models trained on one task to be adapted for another task.

- Pre-trained models often serve as strong starting points for various NLP tasks.

4. Common NLP Tasks:

- Named Entity Recognition (NER): Identifying entities (e.g., names, locations) in text.

- Part-of-Speech Tagging (POS): Assigning grammatical categories to words.

- Sentiment Analysis: Determining the sentiment expressed in a piece of text.

- Machine Translation: Translating text from one language to another.

- Text Summarization: Generating concise summaries of longer texts.

- Question Answering: Providing answers to user queries based on a given context.

5. Attention Mechanism:

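- Attention was first introduced for recurrent neural network translation models, but transformers made it the core of the architecture: they rely entirely on (self-)attention instead of recurrence.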

- Attention enables the model to focus on different parts of the input sequence when making predictions.
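The core computation is scaled dot-product attention from the original Transformer paper, Attention(Q, K, V) = softmax(QKᵀ / √d_k) V. Below is a minimal sketch of a single attention head with no masking, written in plain Python lists instead of a tensor library so each step stays visible. Here `q` holds one row per query, `k` and `v` one row per input position, and `d_k` is the key dimension.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Tuple[Matrix, Matrix]:
    """Return (outputs, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    # Similarity of every query row with every key row, scaled by sqrt(d_k).
    scores = [
        [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
        for q_row in q
    ]
    # Each row of weights is a probability distribution over the input positions.
    weights = [softmax(row) for row in scores]
    # Each output row is the weighted average of the value rows.
    outputs = [
        [sum(w * v_row[j] for w, v_row in zip(w_row, v)) for j in range(len(v[0]))]
        for w_row in weights
    ]
    return outputs, weights
```

A query pointing in the same direction as the first key puts more weight on the first value row; a zero query weights all positions equally and returns their plain average. This is the "focus on different parts of the input" behaviour in numeric form.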

6. BERT and Contextual Embeddings:

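- BERT popularized deeply bidirectional pre-training: its masked language modelling objective lets each word's representation draw on context from both its left and its right.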

- Contextual embeddings capture the meaning of a word based on its surrounding words in a sentence.

7. Ethical Considerations:

- NLP models can unintentionally learn biases present in training data.

- Ethical considerations, fairness, and mitigating biases are essential aspects of NLP development.

8. Evaluation Metrics:

- Accuracy, precision, recall, and F1 score are common for classification tasks such as sentiment analysis and NER; BLEU is common for machine translation, and ROUGE for summarization.
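For a classification task such as sentiment analysis, these metrics come straight from counting true positives, false positives, and false negatives. Below is a minimal sketch in plain Python, assuming binary labels where `1` means positive sentiment and `0` negative (the label values are an illustrative choice).

```python
from typing import Sequence, Tuple


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall_f1(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Tuple[float, float, float]:
    """Precision, recall and F1 for one class (the `positive` label)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # correctly flagged
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # wrongly flagged
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

For example, with true labels `[1, 0, 1, 1, 0, 1]` and predictions `[1, 0, 0, 1, 1, 1]`, there are 3 true positives, 1 false positive, and 1 false negative, so precision, recall, and F1 are all 0.75 while accuracy is 4/6. BLEU and ROUGE instead count overlapping n-grams between generated and reference text.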

9. Challenges:

- Ambiguity, context understanding, and handling out-of-distribution data are persistent challenges.

- Developing models that understand context and nuances in language remains an ongoing area of research.

10. Continual Advancements:

- The field of NLP is dynamic, with continual advancements, new models, and techniques emerging regularly.

- Keeping up with the latest research is crucial for staying informed about state-of-the-art models and practices.

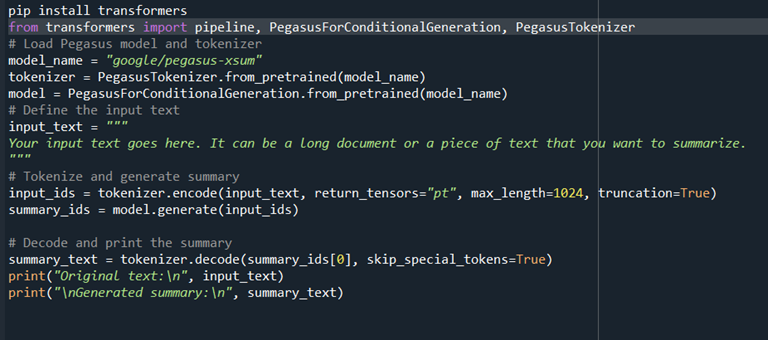

Coding of Pegasus model:
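A minimal sketch of abstractive summarization with a pre-trained PEGASUS checkpoint through the Hugging Face `transformers` library. It assumes `transformers`, PyTorch, and `sentencepiece` are installed, and uses `google/pegasus-xsum`, a public checkpoint fine-tuned on the XSum news dataset (which favours very short, one-sentence summaries). The `summarize` helper is hypothetical, and the beam count and summary length are illustrative choices rather than recommended settings.

```python
from typing import List


def summarize(texts: List[str], model_name: str = "google/pegasus-xsum", max_new_tokens: int = 64) -> List[str]:
    """Generate abstractive summaries for a batch of documents with a PEGASUS checkpoint."""
    # Imported lazily so this module can be imported without transformers/torch installed.
    from transformers import PegasusForConditionalGeneration, PegasusTokenizer

    tokenizer = PegasusTokenizer.from_pretrained(model_name)
    model = PegasusForConditionalGeneration.from_pretrained(model_name)

    # Tokenize the batch; inputs longer than the model's maximum length are truncated.
    batch = tokenizer(texts, truncation=True, padding="longest", return_tensors="pt")

    # Beam search decoding of the summary token IDs.
    summary_ids = model.generate(**batch, num_beams=4, max_new_tokens=max_new_tokens)
    return tokenizer.batch_decode(summary_ids, skip_special_tokens=True)
```

Call it as `summarize(["<your article text>"])`; the first call downloads the checkpoint. Loading the model inside the function keeps the sketch short, but a real application would load the tokenizer and model once and reuse them across calls.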

Important points on Pegasus and PaLM:

1. PEGASUS:

Use Case: PEGASUS is primarily designed for abstractive text summarization.

Key Features:

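- Works at the sentence level: whole sentences, rather than individual tokens, are masked and regenerated during pre-training.

- Utilizes a gap-sentence generation objective during pre-training, which closely resembles summarization itself; the original paper also experimented with combining it with masked language modelling.

- Achieved state-of-the-art ROUGE scores on all 12 downstream summarization benchmarks evaluated in the original 2020 paper.

- Released as an open-source implementation, allowing researchers and developers to use and experiment with the model.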

Applications:

- Abstractive summarization of documents, articles, and other text data.

2. PaLM (Pathways Language Model):

Use Case:

- PaLM is a general-purpose large language model. Among the many tasks it handles, the one we focus on here is question answering over passages of text, where understanding the context within a passage is crucial.

Key Features:

- Decoder-only transformer with up to 540 billion parameters, trained with Google's Pathways system.

- Captures context and relationships within a passage, which supports strong question-answering performance.

- Can be adapted to tasks through few-shot prompting as well as fine-tuning.

- Relates the information within a passage to the query, improving its comprehension of questions.

Applications:

- Question answering, including reading-comprehension tasks where the model must answer questions based on information contained in a passage, alongside other tasks such as translation, summarization, and code generation.

Possible Use Cases and Synergies:

1. Summarization Followed by Question-Answering:

- PEGASUS can be used to generate abstractive summaries of documents or articles.

- PaLM can then leverage these summaries for question-answering tasks, focusing on the key information captured by PEGASUS.

2. Document Understanding:

- PEGASUS can be employed for summarizing lengthy documents, providing a condensed version of the content.

- PaLM, in turn, can process and comprehend these summaries, assisting in tasks such as document-level question-answering or information retrieval.

3. Multimodal Applications:

- Both models may be integrated into larger systems or pipelines that involve not only text but also other modalities (e.g., images, graphs).

- The combined use of PEGASUS and PaLM can contribute to a comprehensive understanding of multimodal information.
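The summarize-then-answer and document-understanding ideas can be sketched as a small pipeline: split a long document into chunks, summarize each chunk, join the partial summaries, and answer the question from that condensed context. In this minimal sketch, `summarize` and `answer` are hypothetical callables you would wrap around a PEGASUS checkpoint and a PaLM-style LLM; they are passed in rather than hard-coded so no specific API is assumed. The `max_words=400` chunk size is an arbitrary word budget, not a real model token limit.

```python
from typing import Callable, List

# Hypothetical interfaces: wrap a PEGASUS checkpoint and a PaLM-style LLM behind these.
Summarizer = Callable[[str], str]
Answerer = Callable[[str, str], str]  # (context, question) -> answer


def chunk_words(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_then_answer(
    document: str,
    question: str,
    summarize: Summarizer,
    answer: Answerer,
    max_words: int = 400,
) -> str:
    # Step 1: summarize each chunk so a long document fits the summarizer's input limit.
    partial_summaries = [summarize(chunk) for chunk in chunk_words(document, max_words)]
    condensed = " ".join(partial_summaries)
    # Step 2: answer the question from the condensed context only.
    return answer(condensed, question)
```

The trade-off of this design is that the answering model only sees the summaries, so a detail the summarizer drops can no longer be used to answer the question.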

Future of NLP models:

1. Large Pre-trained Models:

- The trend of developing ever-larger pre-trained language models, like GPT-3 and PaLM, remains prominent. Continued exploration of model scaling and architecture improvements could lead to even more powerful language representations.

2. Multimodal Models:

- Integrating language understanding with other modalities such as images, audio, and video is gaining attention. Multimodal models aim to comprehend and generate content across different data types, allowing for more comprehensive AI applications.

3. Efficiency and Model Compression:

- As large models come with computational challenges, there is a growing interest in making NLP models more efficient. This includes exploring model compression techniques, quantization, and developing smaller models that can perform well on specific tasks.
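Quantization shrinks a model by storing weights as small integers plus a scale factor. Below is a minimal sketch of symmetric per-tensor int8 quantization in plain Python; real toolkits add per-channel scales, calibration, and integer kernels, none of which are shown here.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One float scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Approximate the original floats from the stored integers."""
    return [x * scale for x in q]
```

With weights `[0.5, -1.27, 0.0, 1.0]` the scale is about 0.01, so 0.5 is stored as the integer 50; each restored weight is off by at most half a scale step, which is the accuracy cost traded for using 8 bits instead of 32.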

4. Few-shot and Zero-shot Learning:

- Advances in few-shot and zero-shot learning allow models to generalize to new tasks with limited examples or even without any task-specific training examples. This could lead to more flexible and adaptable NLP models.
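With large language models, few-shot learning often means placing a handful of solved examples in the prompt instead of retraining the model; zero-shot means giving only the instruction. Below is a minimal sketch of building such a prompt. The `Input:`/`Output:` layout is an arbitrary convention assumed here, not a requirement of PaLM or any specific model API, and `build_few_shot_prompt` is a hypothetical helper.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble an instruction, solved examples, and a new query into one prompt.

    With an empty `examples` list this becomes a zero-shot prompt.
    """
    lines = [instruction, ""]
    for text, label in examples:
        # Each demonstration shows the model the expected input/output pattern.
        lines += [f"Input: {text}", f"Output: {label}", ""]
    # The prompt ends with an open "Output:" for the model to complete.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)
```

For instance, `build_few_shot_prompt("Classify the sentiment.", [("Great film", "positive"), ("Boring plot", "negative")], "I loved it")` yields a two-shot prompt, and passing `[]` for the examples yields the zero-shot version of the same task.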

5. Domain-Specific and Specialized Models:

- NLP models tailored to specific domains or industries are becoming more prevalent. Customized models trained on domain-specific data can outperform general-purpose models on applications in those domains.

6. Explainability and Interpretability:

- There is a growing emphasis on making NLP models more interpretable and explainable. Understanding and justifying the decisions made by models are crucial for building trust and facilitating their deployment in real-world applications.

7. Continual Learning:

- The ability of models to learn continuously over time without forgetting previously learned tasks is a crucial area of research. This is especially important as NLP models are applied to a wide range of tasks in dynamic environments.

8. Robustness and Adversarial Defense:

- Enhancing the robustness of NLP models against adversarial attacks and biases is a key concern. Research efforts focus on developing models that are more resilient and less susceptible to manipulation.

9. Ethical Considerations:

- Addressing ethical concerns, including bias mitigation, fairness, and responsible AI practices, is gaining importance. There is an increased focus on developing models that are aware of and capable of addressing societal and ethical challenges.

10. Interactive and Conversational AI:

- Improving the naturalness and interactivity of conversational agents is an ongoing area of research. More advanced dialogue systems that can understand context, exhibit empathy, and engage in coherent and meaningful conversations are being pursued.

Conclusion

In the ever-evolving landscape of Natural Language Processing (NLP), models like PaLM and PEGASUS stand as a testament to the relentless pursuit of excellence in understanding and generating human-like text. As we traverse the realms of abstractive summarization with the majestic wings of PEGASUS and delve into the intricate passages of contextual comprehension with PaLM, it becomes evident that the future of NLP is both thrilling and promising.

PaLM, with its broad language understanding, including its ability to answer questions about a given passage, opens doors to a world where machines can grasp the nuances and intricacies within textual passages. Its question-answering capabilities paint a picture of NLP models not just as processors of words but as interpreters of context-rich information.

On the other hand, PEGASUS soars to new heights in the realm of abstractive summarization. Its ability to distil the essence of lengthy documents into concise, coherent summaries mirrors the elegance of a well-crafted narrative. PEGASUS not only summarizes text but also breathes life into the art of compression, presenting an exciting paradigm for the distillation and synthesis of information.

Thanks for your patience in reading my blog. If you liked it, please leave feedback in the comments section.