In the vast landscape of artificial intelligence, language models have emerged as powerful tools that can understand, generate, and manipulate human-like text. One fascinating aspect of harnessing these models is the art and science of prompt engineering, a skill that can unlock their true potential. In this blog series, we unravel the intricacies of prompt engineering, from the basics of constructing effective queries to the nuances of refining prompts for specific tasks. In this post, we look at one application of prompt engineering: PAL. Read through the whole post to understand the concept of PAL and the importance of prompt engineering. So, let us begin our journey.

What is PAL?

Before we understand PAL, let us first understand what an LLM is. In the context of artificial intelligence and natural language processing, LLM stands for Large Language Model. These are powerful machine learning models, such as OpenAI’s GPT (Generative Pre-trained Transformer) series, which are trained on massive amounts of data to understand and generate human-like text.

LLMs have recently demonstrated an impressive ability to perform arithmetic and symbolic reasoning tasks when provided with a few examples at test time (“few-shot prompting”). Much of this success can be attributed to prompting methods such as “chain-of-thought”, which use the LLM both to understand the problem description by decomposing it into steps and to solve each step of the problem. While LLMs seem to be adept at this sort of step-by-step decomposition, they often make logical and arithmetic mistakes in the solution part, even when the problem is decomposed correctly.

This is where Program-Aided Language models (PAL) come in. PAL is an approach that uses the LLM to read natural language problems and generate programs as the intermediate reasoning steps, but offloads the solution step to a runtime such as a Python interpreter. With PAL, decomposing the natural language problem into runnable steps remains the only learning task for the LLM, while solving is delegated to the interpreter.

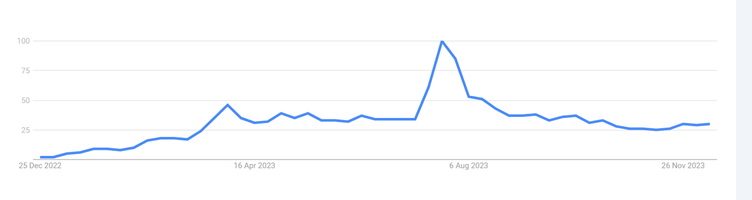

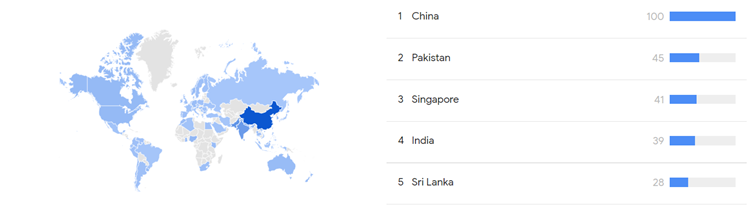
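The division of labour in PAL can be sketched in a few lines of Python. This is only a minimal illustration, not the original implementation: `generate_program` is a hypothetical stand-in for the LLM call and simply returns a hard-coded program, and the convention that the result lives in a variable called `answer` is an assumption made for this sketch.

```python
def generate_program(question: str) -> str:
    """Hypothetical stand-in for an LLM call.

    A real PAL setup would send the question (plus a few examples) to a
    model and get Python source back. Here the program is hard-coded.
    """
    return (
        "tennis_balls = 5\n"
        "bought_balls = 2 * 3  # 2 cans of 3 balls each\n"
        "answer = tennis_balls + bought_balls\n"
    )


def run_program(source: str):
    """Execute the generated program and return its `answer` variable."""
    namespace = {}
    # Emptying __builtins__ only limits what the program can call.
    # It is not a real sandbox, so never run untrusted output like this.
    exec(source, {"__builtins__": {}}, namespace)
    return namespace["answer"]


def pal_answer(question: str):
    """The model writes the steps; the interpreter does the arithmetic."""
    return run_program(generate_program(question))


question = (
    "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?"
)
print(pal_answer(question))  # 11
```

The point of the split is that the arithmetic is never done by the model itself: if the generated steps are right, the interpreter's result is right.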

Let us look at some interesting facts and figures about prompt engineering and large language models:

On average, prompt engineering topics have been searched about 50 times per month worldwide over the last year.

China leads the list of countries searching for these advanced topics.

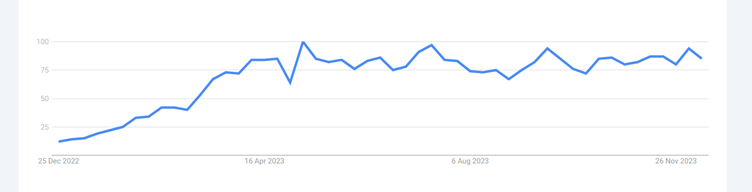

That was about prompt engineering. Now let us look at large language models.

On average, large language model topics have been searched about 70 times per month worldwide over the last year.

Here, too, China leads the list.

More insights about PAL

Let us first understand chain-of-thought prompting. “Chain of thought” prompt engineering involves constructing prompts in a way that encourages a language model to generate a sequence of connected, coherent intermediate steps rather than jumping straight to a final answer. It is about guiding the model’s thinking in a logical progression.

For example, a prompt about artificial intelligence can be designed to lead the language model through a logical sequence:

1. Historical Roots and Evolution of AI:

- The model is prompted to start by delving into the origins of artificial intelligence, tracing its historical development.

2. Key Breakthroughs:

- Following the historical overview, the model is guided to explore significant breakthroughs and advancements in the field of AI.

3. Ethical Considerations:

- The prompt then directs the model to discuss ethical considerations related to AI, fostering a thoughtful exploration of the topic.

4. Future Developments:

- Finally, the model is instructed to speculate on potential future developments in artificial intelligence, completing the chain of thought.
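The four stages above could be assembled into a single prompt along these lines. This is a rough sketch only: the step wording and the `build_chain_prompt` helper are made up for illustration, and the exact phrasing that works best will depend on the model.

```python
# Hypothetical step list mirroring the four stages described above.
STEPS = [
    "Describe the historical roots and evolution of AI.",
    "Explain the key breakthroughs in the field.",
    "Discuss the ethical considerations related to AI.",
    "Speculate on potential future developments.",
]


def build_chain_prompt(topic: str, steps: list[str]) -> str:
    """Number the steps so the model is nudged to answer them in order."""
    lines = [f"Write about {topic}. Work through these steps in order:"]
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    return "\n".join(lines)


prompt = build_chain_prompt("artificial intelligence", STEPS)
print(prompt)
```

Keeping the steps in a list makes it easy to reorder or swap them while experimenting.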

Let us look at a code example of prompt engineering:

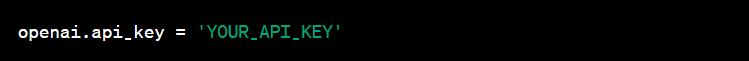

1. Import OpenAI Library and Set API Key:

These lines import the OpenAI library, which provides access to the OpenAI GPT-3 API, and set the API key. Replace ‘YOUR_API_KEY’ with your actual OpenAI API key. You need a valid API key to make requests to the OpenAI API.

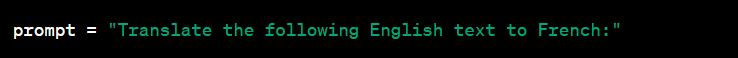

2. Define Prompt:

This line defines a prompt that will be used to instruct the model. In this case, it indicates that the user wants to translate English text to French.

3. Combine Prompt and User Input:

This line combines the predefined prompt and the user’s input to create a complete prompt for the translation task.

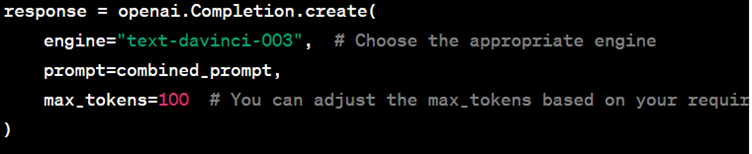

4. Call OpenAI API:

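This line calls the OpenAI API (openai.Completion.create) with the specified parameters. It sends the combined prompt to the API and receives a response. Note that openai.Completion.create belongs to the pre-1.0 versions of the openai Python package; newer versions use a client object instead.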

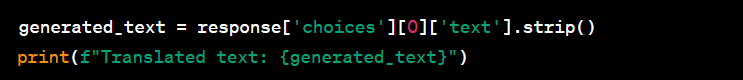

5. Extract and Print Translated Text:

This part extracts the generated text from the API response and prints it as the translated text.
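The five steps can be summarised in a small sketch that runs without an API key. It is not the code from the screenshots: the exact prompt wording is assumed, and `complete` and `fake_complete` are hypothetical names introduced here so that the real API call can be swapped for a canned response.

```python
from typing import Callable

# Step 2: the instruction that tells the model what to do (wording assumed).
PROMPT = "Translate the following English text to French:"


def build_translation_prompt(user_input: str) -> str:
    """Step 3: combine the fixed instruction with the user's text."""
    return f"{PROMPT}\n{user_input}"


def translate(user_input: str, complete: Callable[[str], str]) -> str:
    """Steps 4 and 5: send the prompt and clean up the returned text.

    `complete` is a hypothetical hook: any function that takes a prompt
    string and returns the model's raw text. In the walkthrough it would
    wrap the openai.Completion.create call.
    """
    raw_text = complete(build_translation_prompt(user_input))
    return raw_text.strip()


def fake_complete(prompt: str) -> str:
    """Canned response so the sketch runs without an API key."""
    return "  Bonjour, comment allez-vous ?\n"


print("Translated text:", translate("Hello, how are you?", fake_complete))
```

Passing the completion function in as a parameter keeps the prompt logic separate from the API client, which also makes it easy to test.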

Uses of Prompt Engineering:

1. Task Customization:

- Example: When using a language model for a specific task, such as text completion, translation, summarization, or question-answering, prompt engineering allows users to tailor their queries to elicit desired responses.

2. Improving Specificity:

- Example: In a language model used for generating creative writing, prompt engineering helps users specify the tone, style, or context they want in the generated content.

3. Mitigating Bias:

- Example: Engineers can design prompts that encourage the model to produce more unbiased or neutral responses, helping to address potential bias issues in the model’s outputs.

4. Controlling Output Length:

- Example: By adjusting the prompt or using constraints, users can control the length of the generated text, ensuring it fits within specified limits.

5. Domain Adaptation:

- Example: Engineers can use prompt engineering to adapt a general-purpose language model to a specific domain or industry, ensuring more relevant and accurate responses.

6. Enhancing Specific Features:

- Example: For a language model used in code generation, prompt engineering allows developers to guide the model to focus on certain programming languages, libraries, or coding styles.

7. Generating Multiturn Conversations:

- Example: In a conversational AI system, prompt engineering can be used to simulate a conversation by providing appropriate context and prompts for both user and system turns.

8. Fine-tuning and Training:

- Example: During the fine-tuning process of a language model, prompt engineering is often used to create training data that is representative of the desired behaviour or to address specific challenges in the target application.

9. Translating Instructions:

- Example: When using a language model for a task that requires following instructions, prompt engineering helps provide clear and unambiguous instructions to achieve the desired outcome.

10. Adapting to User Preferences:

- Example: In chatbot applications, prompt engineering can be used to make the chatbot’s responses align with the user’s preferred language, tone, or communication style.
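Several of these uses (task customization, tone, output length, domain adaptation) come down to adding explicit instructions to the prompt. A minimal sketch is shown below, assuming a hypothetical `build_prompt` helper. The instruction wording is illustrative only, and a model is not guaranteed to obey it.

```python
def build_prompt(task, text, tone=None, max_words=None, domain=None):
    """Assemble a prompt from a task plus optional constraints."""
    parts = [task.strip()]
    if domain:
        parts.append(f"Assume the reader works in {domain}.")
    if tone:
        parts.append(f"Use a {tone} tone.")
    if max_words:
        parts.append(f"Keep the answer under {max_words} words.")
    parts.append(f"Text: {text}")
    return "\n".join(parts)


summary_prompt = build_prompt(
    "Summarize the text below.",
    "Large language models are trained on massive amounts of data.",
    tone="friendly",
    max_words=50,
    domain="healthcare",
)
print(summary_prompt)
```

Each optional argument maps to one of the uses in the list, so constraints can be switched on and off independently.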

Interesting facts about Prompt Engineering:

Prompt engineering is an intriguing aspect of working with language models, and it plays a significant role in shaping the behaviour and output of these models. Here are some interesting facts about prompt engineering:

1. Creative Control:

- Prompt engineering gives users a form of creative control over language models. By carefully crafting prompts, users can guide the model to generate content that aligns with specific styles, tones, or themes.

2. Influence on Bias:

- Prompt engineering can be used to mitigate bias in language models. By constructing unbiased prompts and carefully framing questions, developers can influence the model to generate more neutral and fair responses.

3. Iterative Process:

- Successful prompt engineering often involves an iterative process. Developers and users may need to experiment with different prompts, fine-tune their approaches, and observe the model’s responses to achieve the desired behaviour.

4. Task Adaptation:

- Prompt engineering enables the adaptation of language models to different tasks and domains. Developers can design prompts that align with specific applications, making the models more versatile and applicable across a range of scenarios.

5. Combination with Constraints:

- Combining prompt engineering with constraints allows users to exert more fine-grained control over the model’s outputs. Constraints can be used to control factors such as response length, language style, or other specific characteristics.

6. Human-in-the-Loop Collaboration:

- In some applications, prompt engineering involves collaboration between humans and language models. Users can iteratively refine prompts based on the model’s responses, creating a dynamic interaction between human intelligence and machine-generated content.

7. Transfer Learning Challenges:

- Language models, even with sophisticated prompt engineering, might struggle with transfer learning when moving from one domain to another. Fine-tuning and adapting prompts become crucial in overcoming these challenges.

8. Interplay with Model Architecture:

- Different language models may respond differently to prompt engineering based on their underlying architectures. Understanding the nuances of the model’s architecture helps in crafting more effective prompts.

9. Evolving Strategies:

- As language models advance and new iterations are released, prompt engineering strategies may need to evolve. Staying abreast of model updates and adapting prompt engineering techniques accordingly is important.

10. Ethical Considerations:

- Prompt engineering raises ethical considerations, especially when dealing with potentially biased outputs. Researchers and developers must be mindful of the impact of prompts on the fairness and inclusivity of the model’s responses.
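The iterative process mentioned in point 3 can be pictured as a simple loop: try a prompt, inspect the response, and move on to the next variant until the goal is met. The sketch below assumes a hypothetical `stub_model` in place of a real language model and uses sentence count as a deliberately crude success check.

```python
def stub_model(prompt: str) -> str:
    """Hypothetical model: only obeys an explicit length instruction."""
    if "one sentence" in prompt:
        return "PAL lets an interpreter do the arithmetic."
    return "PAL is a technique. It uses programs. An interpreter runs them."


def sentence_count(text: str) -> int:
    """Rough count: non-empty chunks between full stops."""
    return sum(1 for part in text.split(".") if part.strip())


def first_prompt_meeting_goal(candidates, model, max_sentences):
    """Try each candidate prompt in turn until the response is short enough."""
    for prompt in candidates:
        response = model(prompt)
        if sentence_count(response) <= max_sentences:
            return prompt, response
    return None, None


candidates = [
    "Explain PAL.",
    "Explain PAL briefly.",
    "Explain PAL in one sentence.",
]
best_prompt, best_response = first_prompt_meeting_goal(candidates, stub_model, 1)
print(best_prompt)
print(best_response)
```

In practice the check would be a human review or a task-specific metric, but the loop structure stays the same.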

Conclusion:

In conclusion, PAL is a fascinating and powerful technique in the realm of natural language processing (NLP): the language model breaks a problem down into a program, and an interpreter computes the answer. More broadly, prompt engineering lets users and developers shape the behaviour of language models to achieve tailored outcomes. Its impact today is profound, influencing many aspects of NLP and AI applications: it enhances model performance, helps address ethical considerations, and plays a crucial role in shaping the future of AI applications. As technology continues to advance, the role of prompt engineering is likely to become even more central in optimizing and refining language model outputs. Thank you for your patience in reading this blog. If you liked it, please share your feedback in the comment section.